After the Facebook Privacy Debacle, It’s Time for Clear Steps to Protect Users

We learned last weekend that a trove of personal information from 50 million people — one in three U.S. Facebook users — was harvested for an influence and propaganda operation led by Cambridge Analytica, a company later used by the Trump campaign. Was Facebook hacked? Nope. All of this personal information was accessed through the Facebook “app gap,” a major privacy hole in Facebook’s app platform that the ACLU had been challenging since 2009, when we showed how Facebook quizzes posed this threat.

On Wednesday, Facebook promised to make more changes to prevent this from happening again. But there is still more it — and our government — can do to protect our privacy.

First, let’s understand what really happened. An organization called Global Science Research (GSR), run by researchers in the U.K., released an online personality quiz app on Facebook. The app collected personal data not only about the 270,000 people who were paid to use the app and agreed to share information, but also about all their Facebook friends without those friends’ knowledge.

At the end of the day, the app ended up with data on 50 million U.S. Facebook users, including sensitive information about the articles, posts, and pages “liked” by those users. GSR then handed that data to Cambridge Analytica, which used the data to build detailed psychological profiles of the users for political purposes, and whose customers included the presidential campaigns of Sen. Ted Cruz (R-Texas) and Donald Trump.

There’s been some debate over whether this is a “data breach,” but for the most part that is a red herring. If anything, this is arguably worse than a breach: it was not the result of an inadvertent technical failure but a predictable outcome of the choices that Facebook has made to prioritize the bottom line over user privacy and safety.

Whatever you call it, misuse of data by app developers was hardly an unknown threat. In 2009, the ACLU wrote:

Even if your Facebook profile is “private,” when you take a quiz, an unknown quiz developer could be accessing almost everything in your profile: your religion, sexual orientation, political affiliation, pictures, and groups. Facebook quizzes also have access to most of the info on your friends’ profiles. This means that if your friend takes a quiz, they could be giving away your personal information too.

In 2016, the ACLU in California also discovered through a public records investigation that social media surveillance companies like Geofeedia were improperly exploiting Facebook developer data access to monitor Black Lives Matter and other activists. We again sounded the alarm to Facebook, publicly calling on the company to strengthen its data privacy policies and “institute human and technical auditing mechanisms” to both prevent violations and take swift action against developers for misuse.

Facebook has modified its policies and practices over the years to address some of these issues. Its current app platform prevents apps from accessing formerly-available data about a user’s friends. And, after months of advocacy by the ACLU along with the Center for Media Justice and Color of Change, Facebook prohibited use of its data for surveillance tools.

But Facebook’s response to the Cambridge Analytica debacle demonstrates that the company still has significant issues to resolve. It knew about the data misuse back in December 2015 but did not block the company’s access to Facebook until hours before the current story broke. And its initial public response was to hide behind the assertion that “everyone involved gave their consent,” with executives conspicuously silent about the issue. It wasn’t until Wednesday that Mark Zuckerberg surfaced and acknowledged that this was a “breach of trust between Facebook and the people who share their data with us and expect us to protect it” and promised to take steps to repair that trust and prevent incidents like this from occurring again.

Zuckerberg is absolutely right that Facebook needs to act. In fact, it should have acted long ago. Here are a few steps that Facebook could have taken — and, in some cases, still should take — to prevent or address this incident:

1. Put users completely in control over sharing with apps

Facebook users should have complete control over whether any of their information is shared with apps. Right now, that’s not the case, and it does not appear that the planned changes will fix that.

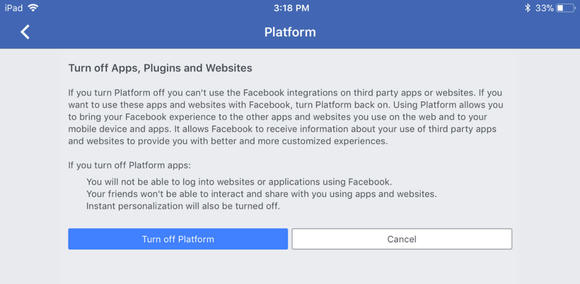

Facebook has declared some types of information, including users’ name and profile picture, “publicly available” — and has made all of that information available to each user’s friends’ apps as well. The only way to prevent this data sharing is to exercise the “nuclear option” of turning off all app functionality. As a stern warning from Facebook explains, this prevents you from using Facebook for a whole list of purposes, including logging into other websites or applications.

As a practical matter, this gives privacy-concerned users two lousy options. They can either forgo using any apps they personally select and trust or allow any app installed by their friends to access information about them. Facebook should give users a meaningful ability to opt out of sharing all information, including “public information,” with “apps others use” without disabling apps entirely.

2. Enhance app privacy settings

Facebook needs to ensure that users truly understand and control how it shares data with apps. The company has promised to do so, but of course the devil is in the details.

One positive step that Facebook is taking is making sure that app permissions expire. Until now, Facebook has granted apps access to information about any user who has EVER accessed the app; now, the company states that it will cut off access if a user does not use the app for three months. In addition, Facebook needs to clarify and redesign its app settings, particularly the “apps others use” settings that, frankly, we don’t understand at all. Whatever those settings do, they should protect privacy by default.

3. Notify users of any misuse of their data

Facebook has promised that it will make sure that users are fully notified of any misuse of their data, past or present. This is a major improvement over the company’s history, including its initial handling of this incident.

Because Facebook claimed that the Cambridge Analytica incident was not a “data breach” in the legal sense, it has not provided notice to users whose data was accessed; the company has promised to change that and notify all affected users. This is the correct policy. Whether data was inappropriately obtained or misused through a hack, a contractual violation, or some other mechanism is a distinction without a difference from the perspective of a Facebook user. And Facebook should continue to develop and improve tools to help users understand which third parties access their data at all.

4. Build stronger auditing and enforcement to ensure that its developer policies are followed

If Facebook is going to rely on its developer policies to prevent harmful outcomes, it needs to ensure that those policies are actually followed. Facebook has again promised to take steps in this direction, but the company needs to follow through this time.

First and foremost, Facebook needs to invest resources and effort into identifying violations. This is not the first time that a developer has violated Facebook’s policies, as reports of developers abusing Facebook apps first surfaced years ago. But far too often, these abuses seem to be detected through whistleblowers or external research rather than by Facebook itself. As we noted to The Washington Post in 2016, the “ACLU shouldn’t have to tell Facebook … what their own developers are doing.” Mr. Zuckerberg has stated that Facebook will “investigate all apps that had access to large amounts of information” and “conduct a full audit of any app with suspicious activity,” which sounds promising but will only be effective if Facebook commits the technical tools and personnel needed to carry that out. In addition, if Facebook learns of an abuse or violation, it has pledged to ban the offender. This is a much better approach than its response to Cambridge Analytica, which continued to have access to the Facebook Platform until last week.

If Facebook had put these protections into place years ago, the personal information of 50 million Facebook users might never have been exploited. Instead, the company is staring down government investigations, its stock price has plummeted, and users are clamoring to #deleteFacebook. Facebook’s promised changes are a good start, though there is more it could do voluntarily. But we also need to ensure that government agencies like the Federal Trade Commission hold companies accountable (especially companies like Facebook that are already subject to a settlement agreement based on previous privacy violations), and we need Congress and other lawmakers to strengthen privacy protections. We hope that other companies will take this opportunity to learn from Facebook’s privacy missteps and avoid making the same mistakes.

For real-life case studies and tips for businesses on privacy and speech, see this ACLU primer.
